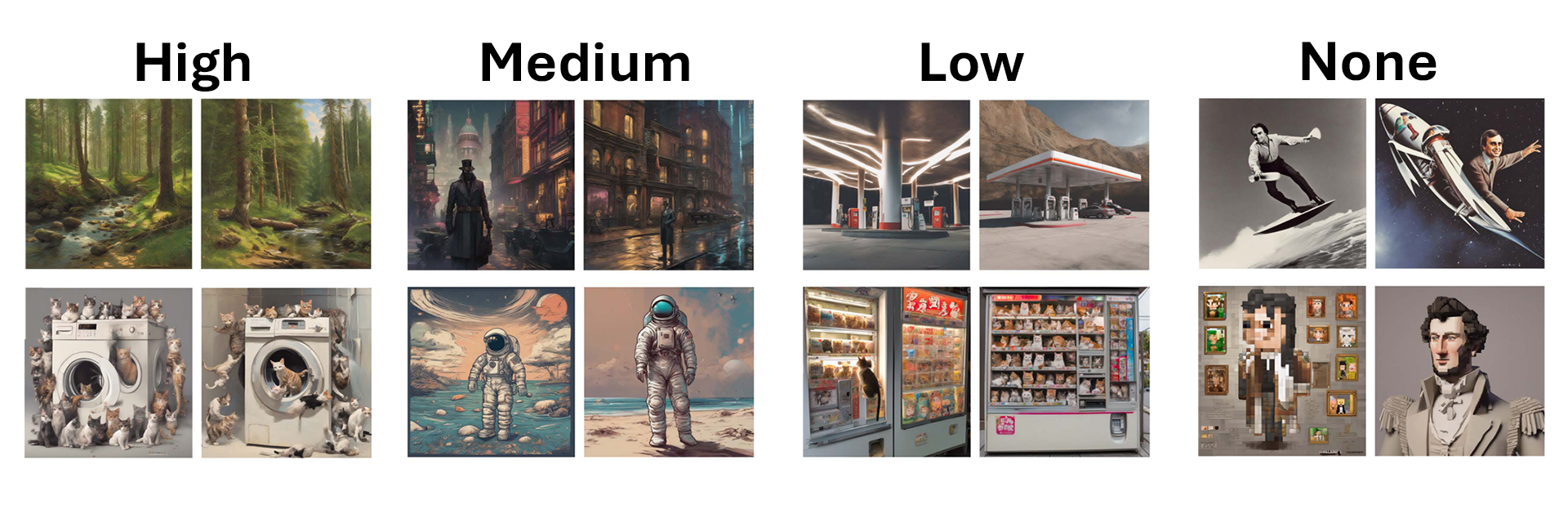

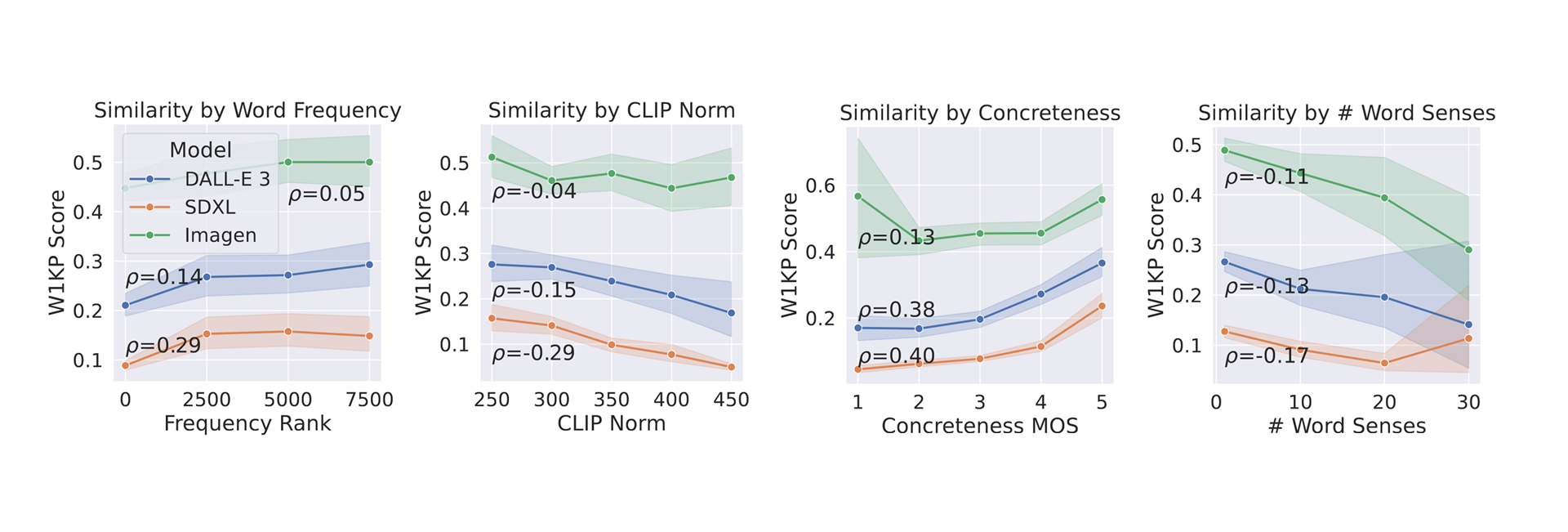

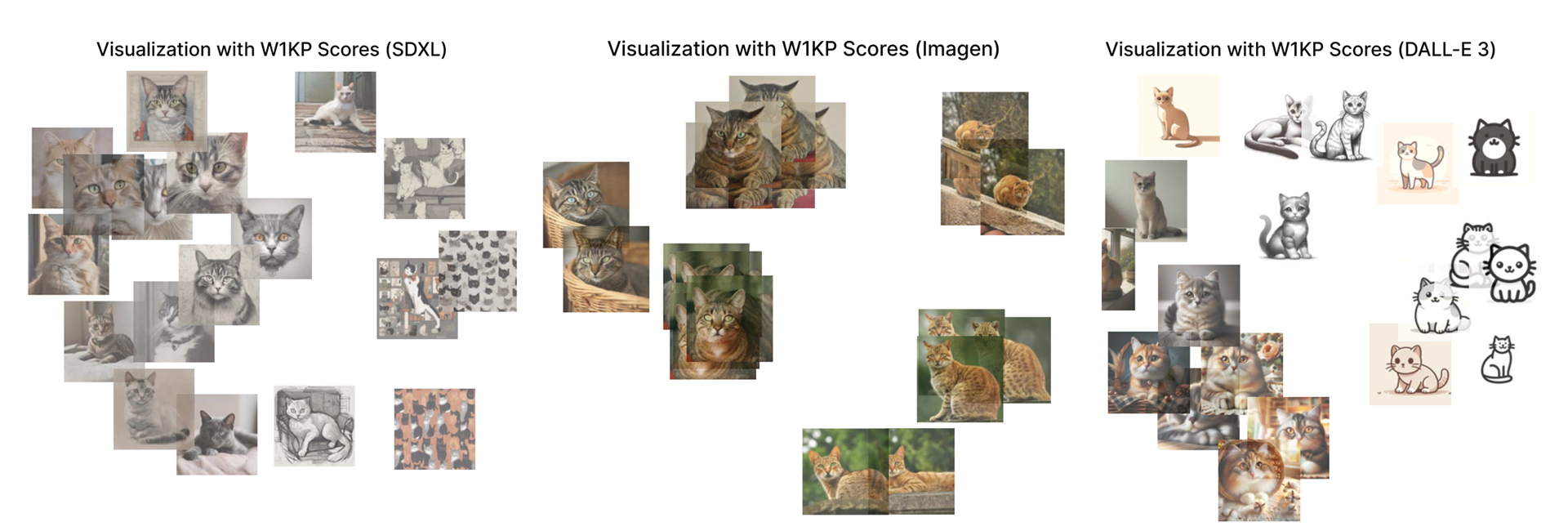

Diffusion models are the state of the art in text-to-image generation, but their perceptual variability remains understudied. In this paper, we examine how prompts affect image variability in black-box diffusion-based models. We propose W1KP, a human-calibrated measure of variability in a set of images, bootstrapped from existing image-pair perceptual distances. Because current datasets do not cover recent diffusion models, we curate three test sets for evaluation. Our best perceptual distance outperforms nine baselines by up to 18 points in accuracy, and our calibration matches graded human judgements 78% of the time. Using W1KP, we study prompt reusability and show that Imagen prompts can be reused for 10 to 50 random seeds before new images become too similar to previously generated ones, while Stable Diffusion XL and DALL-E 3 prompts can be reused 50 to 200 times. Lastly, we analyze 56 linguistic features of real prompts, finding that a prompt's length, CLIP embedding norm, concreteness, and word senses influence variability most. As far as we are aware, we are the first to analyze diffusion variability from a visuolinguistic perspective.
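To make the idea of a set-level score built from image-pair distances concrete, here is a minimal sketch. It is not the W1KP definition, which this page does not spell out. It only assumes that one simple way to summarize a set is to average a pairwise distance over all unordered pairs. The names `set_variability` and `toy_distance` are hypothetical, and `toy_distance` is a stand-in for a real perceptual distance.

```python
from itertools import combinations
from statistics import mean
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def set_variability(items: Sequence[T], distance: Callable[[T, T], float]) -> float:
    """Mean pairwise distance over all unordered pairs in `items`.

    Assumption: averaging pair distances is used here purely for
    illustration; it is not claimed to be how W1KP is computed.
    """
    pairs = list(combinations(items, 2))
    if not pairs:
        raise ValueError("need at least two items to measure variability")
    return mean(distance(a, b) for a, b in pairs)


def toy_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Hypothetical stand-in for a perceptual distance: mean absolute difference."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


# Three tiny "images", each represented as a flat list of pixel values.
images = [[0.0, 0.0], [1.0, 1.0], [0.0, 1.0]]
score = set_variability(images, toy_distance)
```

In practice the `distance` argument would be a learned perceptual metric over real images, and any calibration against human judgements would be applied on top of the raw score.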

@inproceedings{tang2024words,
  title = "Words Worth a Thousand Pictures: Measuring and Understanding Perceptual Variability in Text-to-Image Generation",
  author = "Tang, Raphael and Zhang, Crystina and Xu, Lixinyu and Lu, Yao and Li, Wenyan and Stenetorp, Pontus and Lin, Jimmy and Ture, Ferhan",
  booktitle = "Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing",
  year = "2024",
  url = "https://aclanthology.org/2024.emnlp-main.311",
  pages = "5441--5454",
}